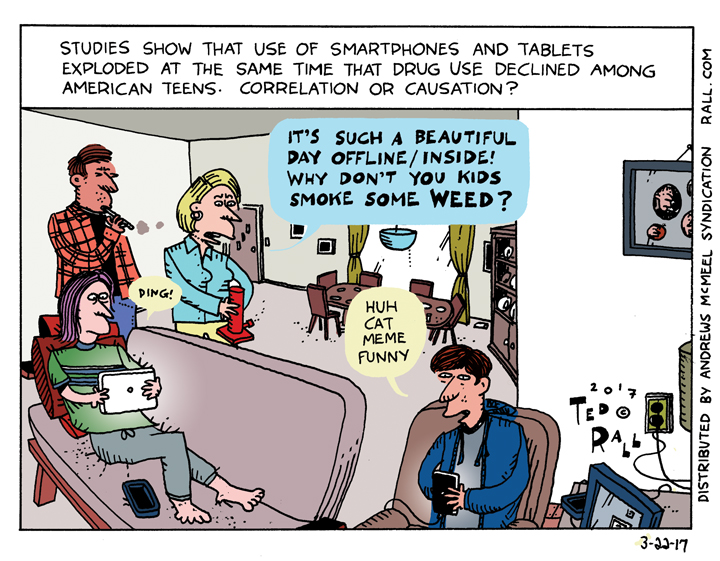

Studies show that the use of smartphones and tablets exploded at the same time that drug use declined among American teens. Correlation or causation?

SYNDICATED COLUMN: The NSA Loses in Court, but the Police State Rolls On

Edward Snowden has been vindicated.

This week marks the first time that a court – a real court, not a sick joke of a kangaroo tribunal like the FISA court, which approves every government request and never hears from opponents – has ruled on the legality of one of the NSA’s spying programs against the American people.

Verdict: privacy 1, police state 0.

Yet the police state goes on. Which is what happens in, you know, a police state. The pigs always win.

A unanimous three-judge ruling by the US Court of Appeals for the Second Circuit, in New York, states unequivocally that the Obama Administration’s interpretation of the USA Patriot Act is fatally flawed. Specifically, it says, Congress never intended for Section 215 to authorize the bulk interception and storage of telephony metadata of domestic phone calls: the calling number, the number called, the length of the call, the locations of both parties, and so on. In fact, the court noted, Congress never knew what the NSA was up to before Snowden spilled the beans.

On the surface, this is good news.

It will soon have been two years since Snowden leaked the NSA’s documents detailing numerous government efforts to sweep up every bit and byte of electronic communications that they possibly can — turning the United States into the Orwellian nightmare of 1984, where nothing is secret and everything can and will be used against you. Many Americans are already afraid to tell pollsters their opinions for fear of NSA eavesdropping.

One can only imagine how chilling the election of a neo-fascist right-winger (I’m talking to you, Ted Cruz and Scott Walker) as president would be. Not that I’m ready for Hillary “privacy for me, not for thee” Clinton to know all my secrets.

Until now, most action on the reform front has taken place abroad, especially in Europe, where concern about privacy online has led individuals as well as businesses to snub American Internet and technology companies, costing Silicon Valley billions of dollars, and accelerated construction of a European alternative to the American dominated “cloud.”

Here in the United States, the NSA continued with business as usual. As far as we know, the vast majority of the programs revealed by Snowden are still operational; there are no doubt many frightening new ones launched since 2013. Members of Congress were preparing to renew the disgusting Patriot Act this summer. One bright spot was the so-called USA Freedom Act, which purports to roll back bulk metadata collection, but privacy advocates say the legislation had been so watered down, and so tolerant of the NSA’s most excessive abuses, that it was just barely more than symbolic.

Like the Freedom Act, this ruling is largely symbolic.

The problem is, it’s not the last word. The federal government will certainly appeal to the U.S. Supreme Court, which could take years before hearing the case. Even in the short run, the court didn’t slap the NSA with an injunction to halt its illegal collection of Americans’ metadata.

What’s particularly distressing is the fact that the court’s complaint is about the interpretation of the Patriot Act rather than its constitutionality. The Obama Administration’s interpretation of Section 215 “cannot bear the weight the government asks us to assign to it, and that it does not authorize the telephone metadata program,” said the court ruling. However: “We do so comfortably in the full understanding that if Congress chooses to authorize such a far-reaching and unprecedented program, it has every opportunity to do so, and to do so unambiguously.”

Well, ain’t that peachy.

As a rule, courts are reluctant to annul laws passed by the legislative branch of government on the grounds of unconstitutionality. In the case of NSA spying on us, however, the harm to American democracy and society is so extravagant, and the failure of the system of checks and balances to rein in the abuses so spectacular, that the patriotic and legal duty of every judge is to do whatever he or she can to put an end to this bastard once and for all.

It’s a sad testimony to the cowardice, willful blindness and lack of urgency of the political classes that the New York court kicked the can down the road, rather than declare the NSA’s metadata collection program a clear violation of the Fourth Amendment’s right to be free from unreasonable search and seizure.

(Ted Rall, syndicated writer and the cartoonist for The Los Angeles Times, is the author of the new critically-acclaimed book “After We Kill You, We Will Welcome You Back As Honored Guests: Unembedded in Afghanistan.” Subscribe to Ted Rall at Beacon.)

COPYRIGHT 2015 TED RALL, DISTRIBUTED BY CREATORS.COM

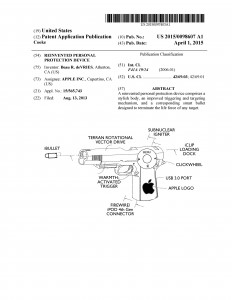

Exclusive: Apple To Debut Its Apple Gun Smart Firearm in 2016

Originally published by ANewDomain.net:

aNewDomain exclusive Auckland, NZ, 01.04.2015 – Apple is soon to announce a smart firearm and will unveil the new weapon, called the Apple Gun, at a special event scheduled to coincide with next spring’s Las Vegas Shooting Hunting and Outdoor Trade show, the largest gathering of gun fans and dealers in the nation.

The event, according to documents leaked to aNewDomain reporters at the Auckland Gun Show in New Zealand, will be timed to coincide with Apple’s 40th anniversary celebration in early April 2016.

The Apple Gun is a continuation of the Cupertino giant’s strategy of expanding its reach beyond personal computing into other products, like its planned Apple Car and widely-anticipated Apple Drone, sources close to the effort told aNewDomain.

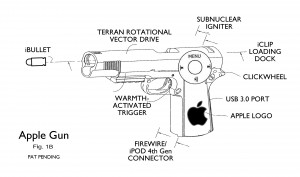

Legal correspondent T. E. Wing unearthed Apple’s Apple Gun patent application, as filed with the US Patent & Trademark Office, in August 2014. A rendering of the Apple Gun as Apple lawyers drew it in the Apple Gun patent application is below. At this writing, the patent is still pending.

Apple PR woman Katie Cotton refused to comment on the Apple Gun information that sources revealed to aNewDomain, saying Apple does not confirm or deny “rumors or unannounced products, however slim and sexy.” Apple CEO Tim Cook, contacted via his Google + account, refused to comment on the report, saying simply: “Imagine.”

Luckily, we don’t have to. Early ad copy obtained by aNewDomain reveals a forthcoming Apple-branded firearm that is “beautiful, simple and accurate.” Not necessarily affordable to every consumer, Apple Gun is about to do for personal protection what the Apple iPhone did to the telephone, sources say.

The ad copy, leaked to aNewDomain at the New Zealand firearms convention early today, continues:

Imagine a firearm that doesn’t make a sound when fired. A weapon that doesn’t look ominous, but to the contrary, sports beautiful design that fits in at the office, at Whole Foods, even in the classroom. A gun with state-of-the-art quality front and center, making accidental maimings a thing of the past.

“A weapon for design freaks and liberals,” comments one source, speaking on condition of anonymity. “It’s brilliant. And deadly.”

“Though it’s going to surprise some of the company’s crunchy NPR-listening client base, this is a logical move for Apple,” said Shell Jackson, an analyst at Goldman Sachs’ tech division. And it’s just the beginning.

“Guns are a $32 billion a year business in the United States. Not only is Apple Gun a smart play for market share in an industry that hasn’t seen a major design development since the demise of the flintlock, it will familiarize the red states – currently PC country – with the Apple brand.” He adds:

“Once Apple Gun has achieved significant market share, it will expand throughout the weapons and munitions sector into high-caliber heavy weapons, long-range missiles and laser-guided bombs, armed drones, maybe even nuclear.”

Featuring smooth, clean lines, a clickwheel and translucent whiteness, Apple Gun is the culmination of Steve Jobs’ last product initiative, Project DeepSix, which was initiated 17 months before his death with the goal of reimagining the handgun. “What if guns were invented today? They wouldn’t be hypermasculine death machines for dimwitted nativist jocks. They’d be pretty. They’d have multiple functionalities: text messaging and other telecommunications, music player, flashlight, sports fitness applications, all tethered to your iPhone and uploaded to iCloud. They’d simplify our lives with beautiful design,” Jobs, a Buddhist known for his angry rants, told his team from his hospital bed, angrily yet calmly.

The Apple Gun “will free the gun from the gun owner,” the source said.

According to an internal company memo accidentally shared to a Dropbox folder owned by an Indian restaurant in Mountain View, California, Apple Gun “will revolutionize the gun concept” by “freeing the gun from the gun owner.”

Let’s say, for example, that you’re worried about someone breaking into your home while you’re at work. Simply leave Apple Gun on a table near your front door. A laser-activated sensor will notify you if someone enters, flash their image to your iPhone, which then relays to your Apple Watch, which must be fully charged to work properly.

If you don’t know the person, press “Y” to fire. If it’s your cleaning lady, press “N.”

What if you want to kill someone, or many someones, but you are too busy to stop them or lie in wait for them? Subscribers to the premium version of Apple’s iCloud storage service can load high-resolution photographs or personal descriptions of the target subjects, rank them in order of kill priority, sync up their Apple Gun, and voilà! Just like that, thanks to Apple’s patented iHead facial-recognition software, whenever the intended victim steps anywhere near the line of fire, termination is automatic and guaranteed.

The twin problem is still being worked on.

A brief look at the Apple Gun patent application reveals a number of intriguing firearm tech features.

Merging traditional bullets and heat-seeking missile technology, Apple’s proprietary iBullet ammunition costs $100 each and will be sold in clips of 20.

Thanks to special casing and the replacement of gunpowder by the release of an electric charge, the dynamic rotation of the iBullet is fueled by the spin of the Earth’s electromagnetic field, working around the laws of physics that create that unpleasant bang in traditional modern firearms.

The last thing you’ll hear before an Apple Gun kills you is a soft “poof,” like the sound emanating from an especially mellow baby blowing a very tiny bubble.

Relatively small at the traditional barrel equivalent of .375 caliber, Apple Bullets locate a target’s heartbeat and follow it anywhere, even around corners. Upon striking flesh, DNA analysis determines the species of the victim as well as its obesity (or lack thereof), telling the bullet to travel the precise distance to the center of its heart before releasing its explosive charge.

Apple engineers on the gun team spent two years killing, and attempting to kill, various life forms at a secret research facility in Nevada’s Black Rock Desert, near the site of the annual Burning Man festival. It is pictured at left.

“It’s a combination of experimentation, algorithms and good luck,” explained one member of the team on the condition of anonymity. “Provided the gun is fully charged” – battery power, company insiders concede, has not yet been fully optimized – “you can take out a rhesus monkey, a rattlesnake, a groundhog, a capybara or a drug-enraged human sociopath at 250 meters with 99.965% accuracy,” he said.

I for one am excited — no, thrilled — about the forthcoming Apple Gun. You know, I wouldn’t buy a gun. But you can take my smartgun out of my cold dead hands.

For aNewDomain, I’m Red Tall.

Sony Hackers: Was It Really N. Korea? Why Some FBI Folk Doubt It

Originally published at ANewDomain.net:

If you were impressed by how fast the FBI placed the blame for the Sony entertainment hacks on North Korea, you weren’t alone. Internet forensics are notoriously complicated, so this was obviously the result of amazingly efficient detective work, right?

Perhaps not.

A number of security experts doubt the US government’s claim of certainty in their accusation.

“The FBI says the attack came from IP addresses — unique computer addresses — that trace back to North Korea,” NPR reports. But those could be spoofed.

“The fact that data was relayed through IPs associated with North Korea is not a smoking gun,” Scott Petry, a network security analyst with Authentic8, told the network. “There are products today that will route traffic through IP addresses around the world.”

The FBI also points to malware used in the Sony attacks. Strings of that code, the feds say, are identical to those used in previous attacks known to have been carried out by North Korean hackers. Petry says that doesn’t mean anything either. Malware gets recycled by hackers all the time. “It’s like saying, ‘My God, this bank robbery was conducted using a Kalashnikov rifle – it must be the Russians who did it!’”

US government officials told the media that they found communications between the hackers that indicated their language of origin was Korean, but other experts say that conclusion is tentative and premature at best.

“Although it’s possible that these messages were written by people whose native language is Korean, it is far more likely that they were Russians,” said Shlomo Argamon, computer science professor at the Illinois Institute of Technology and chief scientist with Taia Global, after examining the writing style.

Finally, there’s the motive problem, as Wired puts it.

We’ve been told that the hacks were carried out on the order of a petulant dictator out to censor a film that disrespected his majesty, Seth Rogen and James Franco’s assassination comedy “The Interview.” But the demands of the hackers seem to align more closely with a financial shakedown. Russians, then? In particular, the demand that Sony “pay proper monetary compensation” or face further attacks points to someone other than a nation-state. Plus, for what it’s worth, North Korea has angrily denied involvement.

So if it wasn’t North Korea – or more accurately, if the US government isn’t 100% certain that it was North Korea – what are they saying that it was – or more accurately, that they are 100% certain that it was?

Robert Graham, CEO of Errata Security, speculated to Wired that a political hack within the FBI “wanted it to be North Korea so much that they just threw away caution.” Once the Obama administration repeatedly told the media that they knew it was North Korea, that became an official narrative that could never be walked back. “There’s this whole groupthink that happens, and once it becomes the message, it’s really hard to say no it’s not this.”

We have seen government groupthink before.

Within hours after the first plane hit the World Trade Center on September 11, 2001, network anchors and US government officials alike were openly jumping to the conclusion that Al Qaeda under the leadership of Osama bin Laden had to be responsible. As with the Sony hacks, what began as pure speculation based on circumstantial evidence – the theatrical nature of the attacks, their simultaneity and so on – soon became an official narrative that no one ever dared question, even when bin Laden denied responsibility (he had, on the other hand, claimed to have been behind the 1998 bombings of the US embassies in East Africa).

There are two parts of the equation here: responsibility and certainty. Who did it? How sure are we?

The FBI appears to be playing fast and loose with the latter question, much in the way that the Bush administration claimed to have been certain that the government of Iraqi dictator Saddam Hussein possessed weapons of mass destruction during the 2002-2003 run-up to the invasion of that country. The lie was not in claiming that Saddam possessed WMDs. The lie was claiming to be sure.

Bin Laden may well have been the sole financier and leader of the 9/11 plot, just as the North Korean government could be responsible for the Sony hacks. In both cases, however, a rush to judgment in anticipation of the facts may prevent some or all of the truth from ever coming to light. In the 9/11 case, for example, considerable evidence points to Islamic Jihad, a radical organization based in Egypt, as well as Saudi financiers. Pinning the blame exclusively on bin Laden and Al Qaeda let those guilty parties escape investigation, and perhaps punishment.

Similarly, the FBI’s premature pinning of blame on the government of President Kim Jong-un could be muddying the waters, thus allowing the actual responsible parties to continue their activities and setting the stage for their next hack attack. Not to mention, is it really a good idea to antagonize a paranoid, nuclear-armed adversary that is already convinced the US intends to invade and occupy it, by falsely accusing it?

It would be nice, though perhaps too much to ask, for the United States government to seek the truth in a calm, deliberative manner. The media can wait after an attack to learn who’s to blame. So can we.

CES 2015: Staring Down the Spectrum of Consumer Desire

Originally published at ANewDomain.net:

It occurs to me, while following announcements of new gadgets coming out this week at CES 2015 in Las Vegas, that new technologies fall into different spectrums of desire. From a consumerism standpoint, new tech falls into four discrete categories (assuming one can afford them):

- Love: Products that, either consciously or unconsciously, you’ve always wanted and that you fall in love with the second you see them. For me, the musical equivalent is the Ramones: I loved them the first time I heard them. The iPod was like that. As soon as a friend explained that you could put 10,000 songs on that one tiny device, I was in, no further sales pitch required.

- Like: Stuff you don’t immediately care about, but come to desire after you learn more about it, whether by watching other people use it or by some other route. Musical equivalent: the Sex Pistols. First time I spun the disc, they sounded like an unholy racket … though I sensed something deeper, wittier and even smarter under all that noise. Twenty listens later, I was a fan. When tablets first came out, I didn’t grasp the appeal of a screen larger than a phone but smaller than a laptop, one that is on all the time. Watching friends use theirs brought me around. For others, it was advertising.

- Dislike: Things that you personally dislike, but have to get in order to participate in society. I feel that way about The New Yorker magazine and social networks like Twitter and Facebook — I don’t care for them at all, yet I buy in. Mostly in order to avoid feeling disconnected from my friends and colleagues.

- Goods you won’t buy. No matter what.

So let’s look at some of the promising goods CES is full of this year, and see where on the spectrum of desire they fall.

4K Televisions

These are TVs with higher resolution than the standard 1080p model that’s probably in your living room right now. They’ve been around for a few years, but the price points (roughly $7,800 in 2012) have been way too high for the average American. Now that 4K televisions are being sold for under $1,000, and are actually approaching the $450 average price of a standard flat screen, we are being told that 4K is about to become the new standard.

That’s probably true. Streaming content providers like Netflix are teaming up with manufacturers to make it so, in the words of Star Trek’s Jean-Luc Picard, but I suspect that most Americans currently categorize the purchase of a 4K television somewhere between categories two and three, between “maybe” and “only if they make me.”

Mainly, this is because most human beings’ eyes can’t tell the difference between their current flat screen, which really looks damned good if you think about what television used to look like, and the newfangled ones. Inertia rules: why replace a perfectly good TV?

Well, because they’ll make us. For instance: bye-bye Betamax, hello VHS.

Nevertheless, 4K purchases will increase in the next couple of years as the old flat screens are discontinued and dismissed by appliance store salesmen as obsolete, not because of pressing demand by viewers for higher resolution, but because the industry is moving that way. You’ll love it soon, I bet.

Self-Driving Cars

This year’s CES is showcasing the early stages of driverless cars in the form of vehicles that park themselves and then come back to you all by their lonesome. This happens as though delivered by an invisible valet with apps that unlock the door, start the engine and adjust the internal temperatures so that everything is just perfect before you get inside.

Hmm.

Polls show that Americans don’t really know how to feel about driverless transportation technology. They think it’s cool, but also disquieting. Some understand the efficiency and safety advantages, such as the fact that a highway could hold two or three times as many cars at rush hour while traveling at higher rates of speed, and that a computer can react more quickly than a human being distracted by a text message.

For geeks, driverless technology clearly fits into category one, a must-have. For the rest of us, there will probably be buy-in — but not before a lot of education. Driverless cars don’t mark the rise of Skynet, but there’s still a creep factor in surrendering control of the road to a device you barely understand. (If you don’t believe me, take the AirTrain into JFK airport. No conductor. I’m not a fan.)

And don’t get me started on the possibility that hackers could tap into your auto’s controls and drive you off a cliff.

I’m 95 percent sure driverless cars will become a thing. But there’s going to be a long psychological adjustment period.

Internet of Things and All Those Little Gadgets

Energy management appliances and devices that use sensors, algorithms and predictive technology to save energy on your refrigerators and home cooling and heating, on the other hand, will likely enjoy intrinsic, immediate appeal to many, if not most, consumers. Who doesn’t like to pay less?

Until now the sales of devices like the high-tech thermostat Nest have been constrained by their relatively high cost. As prices become more affordable and accessible, they will become standard in many homes.

Robotics, wearables and virtual reality products, on the other hand, will divide consumers into each of my four categories of consumerist desire: love, like, dislike and hate.

Google Glass and the Apple Watch anticipated for later this year are, depending on who you are, either the coolest or derpiest things ever. People either want to be seen everywhere with them or not caught dead near them. Some people even want to ban them. I believe that these will be divisive for the foreseeable future, until the marketplace and popular culture arrive at some sort of consensus over whether these are must-haves or must-avoids.

Me? I’m all in when it comes to 3D printing. How about you?

ANewDomain.net Essay: Don’t Hire Anyone Over 30: Ageism in Silicon Valley

Originally published at ANewDomain.net:

Most people know that Silicon Valley has a diversity problem. Women and ethnic minorities are underrepresented in Big Tech. Racist and sexist job discrimination are obviously unfair. They also shape a toxic, insular white male “bro” culture that generates periodic frat-boy eruptions (see, for example, the recent wine-fueled rant of an Uber executive who mused — to journalists — that he’d like to pay journalists to dig up dirt on journalists who criticize Uber. What could go wrong?)

After years of criticism, tech executives are finally starting to pay attention — and some are promising to recruit more women, blacks and Latinos.

This is progress, but it still leaves Silicon Valley with its biggest dirty secret: rampant, brazen age discrimination.

“Walk into any hot tech company and you’ll find disproportionate representation of young Caucasian and Asian males,” University of Washington computer scientist Ed Lazowska told The San Francisco Chronicle. “All forms of diversity are important, for the same reasons: workforce demand, equality of opportunity and quality of end product.”

Overt bigotry against older workers — we’re talking about anyone over 30 here — has been baked into the Valley’s infantile attitudes since the dot-com crash 14 years ago.

Life may begin at 50 elsewhere, but in the tech biz the only thing certain about middle age is unemployment.

The tone is set by the industry’s top CEOs. “When Mark Zuckerberg was 22, he said five words that might haunt him forever. ‘Younger people are just smarter,’ the Facebook wunderkind told his audience at a Y Combinator event at Stanford University in 2007. If the merits of youth were celebrated in Silicon Valley at the time, they have become even more enshrined since,” Alison Griswold writes in Slate.

It’s illegal, under the federal Age Discrimination in Employment Act of 1967, to pass up a potential employee for hire, or to fail to promote, or to fire a worker, for being too old. But don’t bother telling that to a tech executive. What used to be a meritocracy has become a don’t-hire-anyone-over-30 (certainly not over 40) culture, right under the nose of the tech media.

Which isn’t surprising. The supposed watchdogs of the Fourth Estate are wearing the same blinders as their supposed prey. The staffs of news sites like Valleywag and Techcrunch skew as young as the companies they cover.

A 2013 BuzzFeed piece titled “What It’s Like Being The Oldest BuzzFeed Employee” (subhead: “I am so, so lost, every workday.”) by a 53-year-old BuzzFeed editor “old enough to be the father of nearly every other editorial employee” (average age: late 20s) reads like a repentant landlord-class sandwich-board confession during China’s Cultural Revolution: “These whiz-kids completely baffle me, daily. I am in a constant state of bafflement at BF HQ. In fact, I’ve never been more confused, day-in and day-out, in my life.” It’s the most pathetic attempt at self-deprecation I’ve read since the transcripts of Stalin’s show trials.

A few months later, the dude got fired by his boss (15 years younger): “This is just not working out, your stuff. Let’s just say, it’s ‘creative differences.’”

Big companies are on notice that they’re on the wrong side of employment law. They just don’t care.

Slate reports: “In 2011, Google reached a multimillion-dollar settlement in a…suit with computer scientist Brian Reid, who was fired from the company in 2004 at age 54. Reid claimed that Google employees made derogatory comments about his age, telling him he was ‘obsolete,’ ‘sluggish,’ and an ‘old fuddy-duddy’ whose ideas were ‘too old to matter.’ Other companies, including Apple, Facebook, and Yahoo, have gotten themselves in hot water by posting job listings with ‘new grad’ in the description. In 2013, Facebook settled a case with California’s Fair Employment and Housing Department over a job listing for an attorney that noted ‘Class of 2007 or 2008 preferred.’”

Because the fines and settlements have been mere slaps on the wrist, the cult of the Youth Bro is still going strong.

To walk the streets of Austin during tech’s biggest annual confab, South by Southwest Interactive, is to experience a society where Boomers and Gen Xers have vanished into a black hole. Photos of those open-space offices favored by start-ups document workplaces where people over 35 are as scarce as women on the streets of Kandahar. From Menlo Park to Palo Alto, token fortysomethings wear the nervous shrew-like expressions of creatures in constant danger of getting eaten — dressed a little too young, heads down, no eye contact, hoping not to be noticed.

“Silicon Valley has become one of the most ageist places in America,” Noam Scheiber reported in a New Republic feature that describes tech workers as young as 26 seeking plastic surgery in order to stave off the early signs of male pattern baldness and minor skin splotches on their faces.

Whatever you do, don’t look your age — unless your age is 22.

“Robert Withers, a counselor who helps Silicon Valley workers over 40 with their job searches, told me he recommends that older applicants have a professional snap the photo they post on their LinkedIn page to ensure that it exudes energy and vigor, not fatigue,” Scheiber writes. “He also advises them to spend time in the parking lot of a company where they will be interviewing so they can scope out how people dress.”

The head of the most prominent start-up incubator told The New York Times that most venture capitalists in the Valley won’t take a pitch from anyone over 32.

In early November, VCs handed over several hundred thousand bucks to a 13-year-old.

Aside from the legal and ethical considerations, does Big Tech’s cult of youth matter? Scheiber says hell yes: “In the one corner of the American economy defined by its relentless optimism, where the spirit of invention and reinvention reigns supreme, we now have a large and growing class of highly trained, objectively talented, surpassingly ambitious workers who are shunted to the margins, doomed to haunt corporate parking lots and medical waiting rooms, for reasons no one can rationally explain. The consequences are downright depressing.”

One result of ageism that jumps to the top of my mind is brain drain. Youthful vigor is vital to success in business. So is seasoned experience. The more closely an organization reflects society at large, the smarter it is.

A female colleague recently called to inform me that she was about to get laid off from her job as an editor and writer for a major tech news site. (She was, of course, the oldest employee at the company.) Naturally caffeinated, addicted to the Internet and pop culture, she’s usually the smartest person in the room. I see lots of tech journalism openings for which she’d be a perfect fit, yet she’s at her wit’s end. “I’m going to jump off a bridge,” she threatened. “What else can I do? I’m 45. No one’s ever going to hire me.” Though I urged her not to take the plunge, I couldn’t argue with her pessimism. Objectively, though, I think the employers who won’t talk to her are idiots. For their own sakes.

Just a month before, I’d met with an executive of a major tech news site who told me I wouldn’t be considered for a position due to my age. “Aside from being stupid,” I replied, “you do know that’s illegal, right?”

“No one enforces it,” he shrugged. He’s right. The feds don’t even keep national statistics on hiring by age.

The median American worker is age 42. The median age at Facebook, Google, AOL and Zynga, on the other hand, is 30 or younger. Twitter, which recently got hosed in an age discrimination lawsuit, has a median age of 28.

Big Tech doesn’t want you to know they don’t hire middle-aged Americans. Age data was intentionally omitted from the recent spate of “we can do better” mea culpa reports on company diversity.

It’s easy to suss out why: they prefer to hire cheaper, more disposable, more flexible (willing to work longer hours) younger workers. Apple and Facebook recently made news by offering to freeze their female workers’ eggs so they can delay parenthood in order to devote their 20s and 30s to the company.

The dirty secret is not so secret when you scour online want ads. “Many tech companies post openings exclusively for new or recent college graduates, a pool of candidates that is overwhelmingly in its early twenties,” Verne Kopytoff writes in Fortune.

“It’s nothing short of rampant,” said UC Davis comp sci professor Norm Matloff, about age discrimination against older software developers. Adding to the grim irony for Gen Xers: today’s fortysomethings suffered reverse age discrimination — old people in power screwing the young — at the hands of Boomers in charge when they were entering the workforce.

Once too young to be trusted, now too old to get hired.

Ageist hiring practices are so over-the-top illegal, you have to wonder: do these jerks have in-house counsel?

Kopytoff: “Apple, Facebook, Yahoo, Dropbox, and video game maker Electronic Arts all recently listed openings with ‘new grad’ in the title. Some companies say that recent college graduates will also be considered and then go on to specify which graduating classes—2011 or 2012, for instance—are acceptable.”

The feds take a dim view of these ads.

“In our view, it’s illegal,” Raymond Peeler, senior attorney advisor at the Equal Employment Opportunity Commission, told Kopytoff. “We think it deters older applicants from applying.” Gee, you think? But the EEOC has yet to smack a tech company with a big fine.

The job market is supposed to eliminate inefficiencies like this, where companies that need experienced reporters fire them while retaining writers who are so wet behind the ears you want to check for moss. But ageism is so ingrained into tech culture that it’s part of the scenery, a cultural signifier like choosing an iPhone over Android. Everyone takes it for granted.

Scheiber describes a file storage company’s annual Hack Week, which might as well be scientifically designed in order to make adults with kids and a mortgage run away screaming: “Dropbox headquarters turns into the world’s best-capitalized rumpus room. Employees ride around on skateboards and scooters, play with Legos at all hours, and generally tool around with whatever happens to interest them, other than work, which they are encouraged to set aside.”

No matter how cool a 55-year-old you are, you’re going to feel left out. Which, one suspects, is the point.

It’s impossible to overstate how ageist many tech outfits are.

Electronic Arts contacted Kopytoff to defend its “new grad” employment ads, only to confirm their bigotry. The company “defended its ads by saying that it hires people of all ages into its new grad program. To prove the point, the company said those accepted into the program range in age from 21 to 35. But the company soon had second thoughts about releasing such information, which shows a total absence of middle-aged hires in the grad program, and asked Fortune to withhold that detail from publication. (Fortune declined.)”

EA’s idea of age diversity is zero workers over 35.

Here is one case where an experienced, forty- or fifty- or even sixtysomething in-house lawyer or publicist might have saved them some embarrassment — and legal exposure.

In the big picture, Silicon Valley is hardly an engine of job growth; it hasn’t added a single net new job since 1998. “Big” companies like Facebook and Twitter hire only a few thousand workers each. Instagram famously had only 13 when Facebook bought it. They have little interest in contributing to the commonweal. Nevertheless, ageism in the tiny tech sector has a disproportionately high influence on practices in other workplaces. If it is allowed to continue, it will spread to other fields.

It’s hard to see how anything short of a massive class-action lawsuit — one that dings tech giants for billions of dollars — will make Big Tech hire Xers, much less Boomers.

SYNDICATED COLUMN: Game of Drones – New Generation of Drones Already Choose Their Own Targets

“The drone is the ultimate imperial weapon, allowing a superpower almost unlimited reach while keeping its own soldiers far from battle,” writes New York Times reporter James Risen in his important new book “Pay Any Price: Greed, Power, and Endless War.” “Drones provide remote-control combat, custom-designed for wars of choice, and they have become the signature weapons of the war on terror.”

But America’s monopoly on death from a distance is coming to an end. Drone technology is relatively simple and cheap to acquire — which is why more than 70 countries, plus non-state actors like Hezbollah, have combat drones.

The National Journal’s Kristin Roberts imagines how drones could soon “destabilize entire regions and potentially upset geopolitical order”: “Iran, with the approval of Damascus, carries out a lethal strike on anti-Syrian forces inside Syria; Russia picks off militants tampering with oil and gas lines in Ukraine or Georgia; Turkey arms a U.S.-provided Predator to kill Kurdish militants in northern Iraq who it believes are planning attacks along the border. Label the targets as terrorists, and in each case, Tehran, Moscow, and Ankara may point toward Washington and say, we learned it by watching you. In Pakistan, Yemen, and Afghanistan.”

Next: SkyNet.

SkyNet, you recall from the Terminator movies, is a computerized defense network whose artificial intelligence programming leads it to self-awareness. People try to turn it off; SkyNet interprets this as an attack — on itself. Automated genocide follows in an instant.

In an article you should read carefully because/despite the fact that it will totally freak you out, The New York Times reports that “arms makers…are developing weapons that rely on artificial intelligence, not human instruction, to decide what to target and whom to kill.”

More from the Times piece:

“Britain, Israel and Norway are already deploying missiles and drones that carry out attacks against enemy radar, tanks or ships without direct human control. After launch, so-called autonomous weapons rely on artificial intelligence and sensors to select targets and to initiate an attack.

“Britain’s ‘fire and forget’ Brimstone missiles, for example, can distinguish among tanks and cars and buses without human assistance, and can hunt targets in a predesignated region without oversight. The Brimstones also communicate with one another, sharing their targets.

[…]“Israel’s antiradar missile, the Harpy, loiters in the sky until an enemy radar is turned on. It then attacks and destroys the radar installation on its own.

“Norway plans to equip its fleet of advanced jet fighters with the Joint Strike Missile, which can hunt, recognize and detect a target without human intervention.”

“An autonomous weapons arms race is already taking place,” says Steve Omohundro, a physicist and AI specialist at Self-Aware Systems. “They can respond faster, more efficiently and less predictably.”

As usual, the United States is leading the way toward dystopian apocalypse, setting precedents for the use of sophisticated, novel, more efficient killing machines. We developed and dropped the first nuclear bombs. We unleashed the drones. Now we’re at the forefront of AI missile systems.

The first test was a disaster: “Back in 1988, the Navy test-fired a Harpoon antiship missile that employed an early form of self-guidance. The missile mistook an Indian freighter that had strayed onto the test range for its target. The Harpoon, which did not have a warhead, hit the bridge of the freighter, killing a crew member.”

But we’re America! We didn’t let that slow us down: “Despite the accident, the Harpoon became a mainstay of naval armaments and remains in wide use.”

U-S-A! U-S-A!

I can see you tech geeks out there, shaking your heads over your screen, saying to yourselves: “Rall is paranoid! This is new technology. It’s bound to improve. AI drones will become more accurate.”

Not necessarily.

Combat drones have hovered over towns and villages in Afghanistan and Pakistan for the last 13 years, killing thousands of people. The accuracy rate is less than impressive: 3.5%. That’s right: 96.5% of the victims are, by the military’s own assessment, innocent civilians.

The Pentagon argues that its new generation of self-guided hunter-killers are merely “semiautonomous” and so don’t run afoul of a U.S. rule against such weapons. But only the initial launch is initiated by a human being. “It will be operating autonomously when it searches for the enemy fleet,” Mark Gubrud, a physicist who is a member of the International Committee for Robot Arms Control, told the Times. “This is pretty sophisticated stuff that I would call artificial intelligence outside human control.”

If that doesn’t worry you, this should: it’s only a matter of time before other countries, some of which don’t like us, get these too.

Not much time.

(Ted Rall, syndicated writer and cartoonist, is the author of the new critically-acclaimed book “After We Kill You, We Will Welcome You Back As Honored Guests: Unembedded in Afghanistan.” Subscribe to Ted Rall at Beacon.)

COPYRIGHT 2014 TED RALL, DISTRIBUTED BY CREATORS.COM

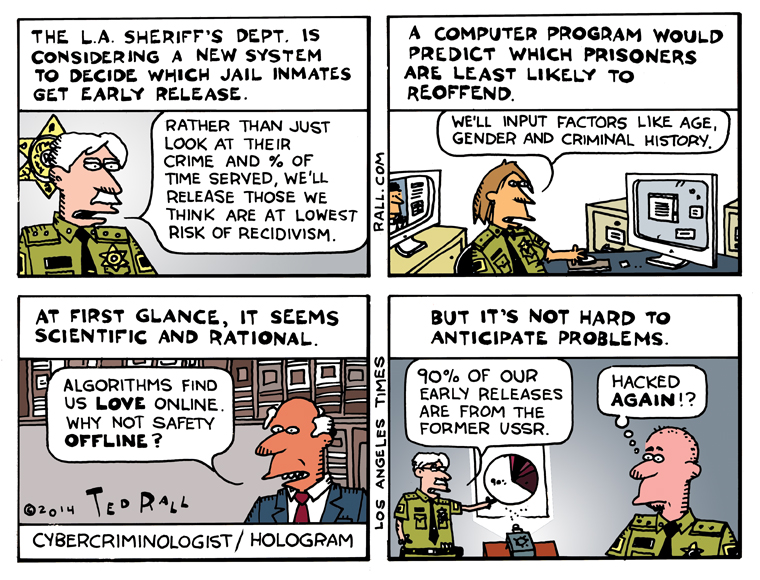

LOS ANGELES TIMES CARTOON: RoboSheriff

Computer algorithms drive online dating sites that promise to hook you up with a compatible mate. They help retailers suggest that, because you liked this book or that movie, you’ll probably be into this music. So it was probably inevitable that programs based on predictive algorithms would be sold to law enforcement agencies on the pitch that they’ll make society safe.

The LAPD feeds crime data into PredPol, which then spits out a report predicting — reportedly with impressive accuracy — where “property crimes specifically, burglaries and car break-ins and thefts are statistically more likely to happen.” The idea is, if cops spend more time in these high-crime spots, they can stop crime before it happens.

Chicago police used predictive algorithms designed by an Illinois Institute of Technology engineer to create a 400-suspect “heat list” of “people in the city of Chicago supposedly most likely to be involved in violent crime.” Surprisingly, some of these Chicagoans — who receive personal visits from high-ranking cops telling them that they’re being watched — have never committed a violent crime themselves. But their friends have, and that can be enough.

In other words, today’s not-so-bad guys may be tomorrow’s worst guys ever.

But math can also be used to guess which among yesterday’s bad guys are least likely to reoffend. Never mind what they did in the past. What will they do from now on? California prison officials, under constant pressure to reduce overcrowding, want to limit early releases to the inmates most likely to walk the straight and narrow.

Toward that end, The Times’ Abby Sewell and Jack Leonard report that the L.A. Sheriff’s Department is considering changing its current evaluation system for early releases of inmates to one based on algorithms:

Supporters argue the change would help select inmates for early release who are less likely to commit new crimes. But it might also raise some eyebrows. An older offender convicted of a single serious crime, such as child molestation, might be labeled lower-risk than a younger inmate with numerous property and drug convictions.

The Sheriff’s Department is planning to present a proposal for a “risk-based” release system to the Board of Supervisors.

“That’s the smart way to do it,” interim Sheriff John L. Scott said. “I think the percentage [system, which currently determines when inmates get released by looking at the seriousness of their most recent offense and the percentage of their sentence they have already served] leaves a lot to be desired.”

Washington state uses a similar system, which has a 70% accuracy rate. “A follow-up study…found that about 47% of inmates in the highest-risk group returned to prison within three years, while 10% of those labeled low-risk did.”

No one knows which ex-cons will reoffend — sometimes not even the recidivist himself or herself. No matter how we decide which prisoners walk free before the end of their sentences, whether it’s a judgment call rendered by corrections officials or one generated by algorithms, it comes down to human beings guessing what other human beings will do. Behind every high-tech solution, after all, are programmers and analysts who are all too human. Even if that 70% accuracy rate improves, some prisoners who have been rehabilitated and ought to have been released will languish behind bars while others, dangerous despite best guesses, will go out to kill, maim and rob.

If the Sheriff’s Department moves forward with predictive algorithmic analysis, they’ll be exchanging one set of problems for another.

Technology is morally neutral. It’s what we do with it that makes a difference.

That, and how many Russian hackers manage to game the system.

(Ted Rall, cartoonist for The Times, is also a nationally syndicated opinion columnist and author. His new book is Silk Road to Ruin: Why Central Asia is the New Middle East.)