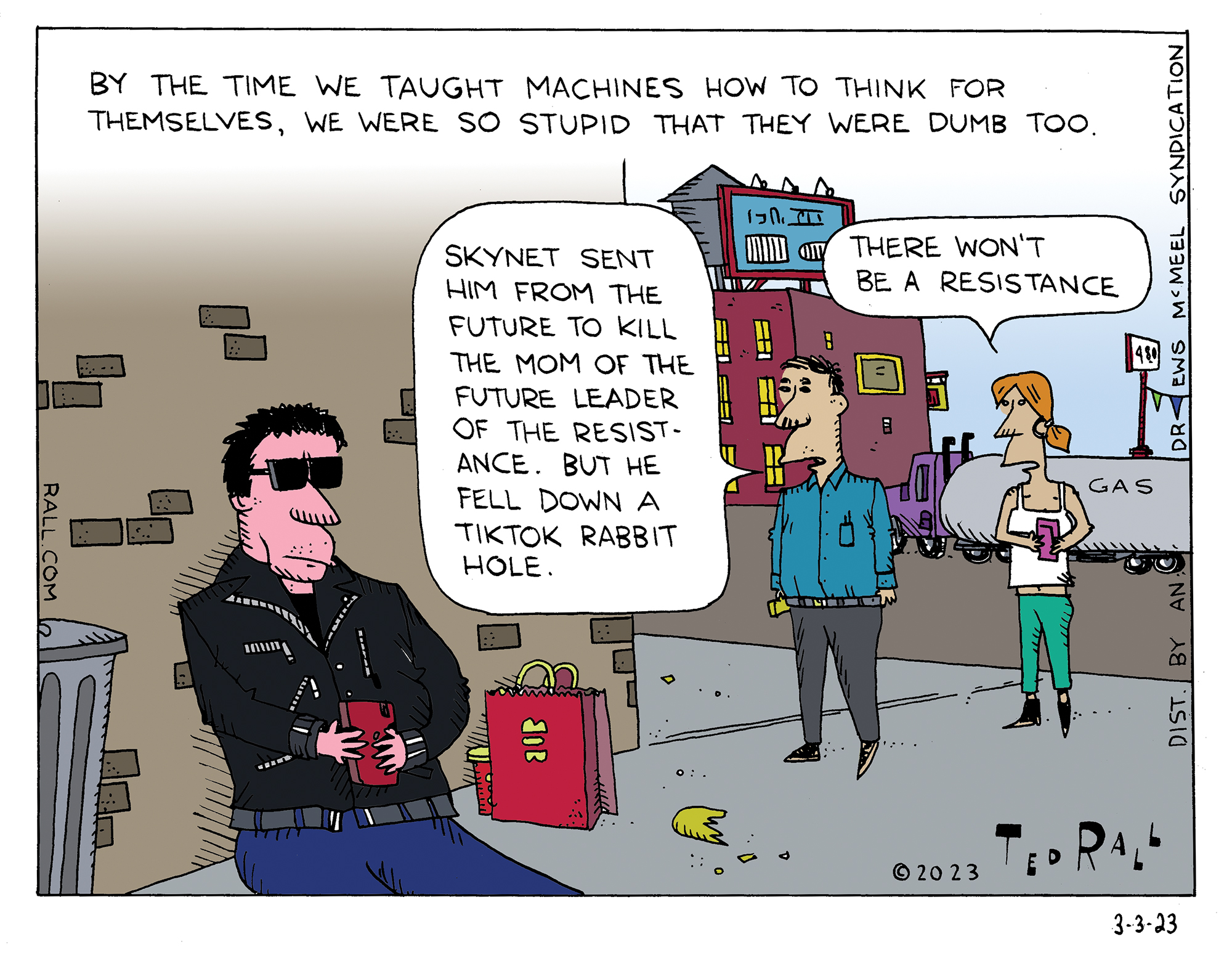

OpenAI’s ChatGPT has captured the imagination of the American public with the prospect that artificial intelligence has finally arrived at the high level promised by science fiction. But many tests find that the product is far less capable than one might expect.

We Have Seen the Future, and It Is Stupid

Ted Rall

Ted Rall is a syndicated political cartoonist for Andrews McMeel Syndication and WhoWhatWhy.org and Counterpoint. He is a contributor to Centerclip and co-host of "The TMI Show" talk show. He is a graphic novelist and author of many books of art and prose, and an occasional war correspondent. He is, recently, the author of the graphic novel "2024: Revisited."

1 Comment

I took some university courses at an AI department in the early 2000s, and they literally taught the history of the field as a series of hype cycles: expert systems in the 1960s-70s, neural networks in the 1980s, etc.

Each time, (1) some actual if modest breakthroughs were followed by (2) increasingly absurd claims about achieving human-level intelligence, then by (3) much reduced claims in very circumscribed areas of application (e.g. checking X-rays for cancer), and (4) even those would hit certain limitations.

The actual coursework would mostly lay out the strengths but also, crucially, the limitations of each method, and learning a method effectively entailed learning how to deal with both.

[In a nutshell, the hard limits have to do with 1) language-like systems lacking the deep understanding of simple concepts that any three-year-old has and 2) “neural network”-like systems – including today’s machine learning – really being applications of statistics to large datasets that may show interesting – even novel – insights, but can also be misleading and always need to be interpreted. But interpretation is difficult ;-).]

Since then, a few genuinely novel advancements have been made and some hodge-podge “hybrid” systems have been introduced that aim to combine the strengths of different approaches, plus of course much increased computing power, plus absurdly large datasets from trawling the interwebs in a semi-legal manner, cameras on cars, etc.

None of the fundamental limitations were ever addressed (because they are damned difficult to address…)

These limitations are not a secret – literally tens or hundreds of thousands of people who took AI classes, even quite basic ones, in the last decades are familiar with them.

Why are so-called business people, journalists, etc. captured by the opening phase of the 1980s hype cycle AGAIN? (The same hype cycle already expanded to hit the general public during the dot-com bubble.)

Why aren’t journalists talking to any of the practitioners in the field whose knowledge of the limitations of AI is their daily bread and butter? Or at least talking to their niece who took some intro to AI course as part of her computer science degree?

It does seem baffling.

Btw, this is not a “killjoy” thing, since there are circumscribed applications where “AI” methods are already making a huge difference and are changing white-collar job descriptions, e.g. in patent search, translation management systems, etc. There certainly is no shortage of actual news stories about the actual impact of computing technology, including AI systems, on the actual work of actual people…