In election after election, tinier slices of ever-more-specific demographic groups in fewer battleground states determined the outcome. Finally, A.I. narrowed down the process to the perfect precision of a single person.

We Need a Universal High Income

“Get a job!” That’s the clichéd response to panhandlers and anyone else who complains of being broke. But what if you can’t?

That dilemma is the crux of an evolving silent crisis that threatens to undermine the foundation of the American economic model.

Two-thirds of gross domestic product, most of the economy, is fueled by personal consumer spending. Most spending is sourced from personal income, overwhelmingly from salaries paid by employers. But employers will need fewer and fewer employees.

You don’t need a business degree to understand the nature of the doom loop. A smaller labor force earns a smaller national income and spends less. As demand shrinks, companies lay off many of their remaining workers, who themselves spend less, on and on until we’re all in bread lines.

Assuming there are any charities collecting enough donations to pay for the bread.

The workforce participation rate has already been shrinking for more than two decades, forcing fewer workers to pay higher taxes. It’s about to get much worse.

Workers are already being replaced by robotics, artificial intelligence and other forms of automation. Estimates vary about how many and how quickly these technologies will kill American jobs as they scale and become widely accepted, but there’s no doubt the effects will be huge and that we will see them sooner rather than later. A report by MIT and Boston University finds that two million manufacturing jobs will disappear within the coming year; Freethink sounds the death knell for 65% of retail gigs in the same startlingly short time span. A different MIT study predicts that “only 23%” of current worker wages will be replaced by automation, but it won’t happen immediately “because of the large upfront costs of AI systems.” Disruptive technologies like A.I. will create new jobs. Overall, however, McKinsey consulting group believes that 12 million Americans will be kicked off their payrolls by 2030.

“Probably none of us will have a job,” Elon Musk said earlier this year. “If you want to do a job that’s kinda like a hobby, you can do a job. But otherwise, A.I. and the robots will provide any goods and services that you want.”

For this to work, Musk observed, idled workers would have to be paid a “universal high income”—the equivalent of a full-time salary, but to stay at home. This is not to be conflated with the “universal basic income” touted by people like Andrew Yang, which is a nominal annual government subsidy, not enough to pay all your expenses.

“It will be an age of abundance,” Musk predicts.

The history of technological progress suggests otherwise. From the construction of bridges across the Thames during the late 18th and early 19th centuries that sidelined London’s wherry men who ferried passengers and goods, to the deindustrialization of the Midwest that has left the heartland of the United States with boarded-up houses and an epic opioid crisis, to Uber and Lyft’s solution to a non-existent problem that now has yellow-taxi drivers committing suicide, ruling-class political and business elites rarely worry about the people who lose their livelihoods to “creative destruction.”

Whether you’re a 55-year-old wherry man or cabbie or accountant who loses your job through no fault of your own other than having the bad luck to be born at a time of dramatic change in the workplace, you always get the same advice. Pay to retrain in another field—hopefully you have savings to pay for it, hopefully your new profession doesn’t become obsolete too! “Embrace a growth mindset.” Whatever that means. Use new tech to help you with your current occupation—until your boss figures out what you’re up to and decides to make do with just the machine.

Look at it from their—the boss’s—perspective. Costs are down, profits are up. They don’t know you, they don’t care about you, guilt isn’t a thing for them. What’s not to like about the robotics revolution?

Those profits, however, belong to us at least as much as they do to “them”—employers, bosses, stockholders. Artificial intelligence and robots are not magic; they were not conjured up from thin air. These technologies were created and developed by human beings on the backs of hundreds of millions of American workers in legacy and now-moribund industries. If the wealthy winners of this latest tech revolution are too short-sighted and cruel to share the abundance with their fellow citizens—if for no better reason than to save their skins from a future violent uprising and their portfolios from disaster when our consumerism-based economy comes crashing down—we should force them to do so.

(Ted Rall (Twitter: @tedrall), the political cartoonist, columnist and graphic novelist, co-hosts the left-vs-right DMZ America podcast with fellow cartoonist Scott Stantis. His latest book, brand-new right now, is the graphic novel 2024: Revisited.)

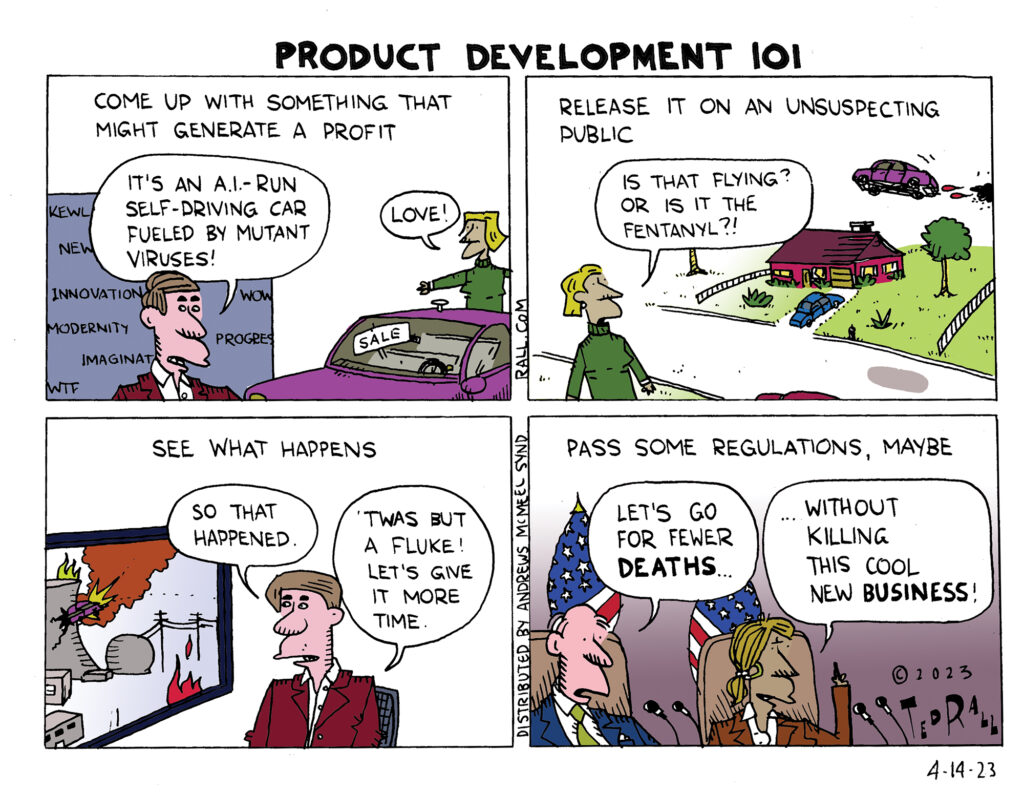

Ready Or Not

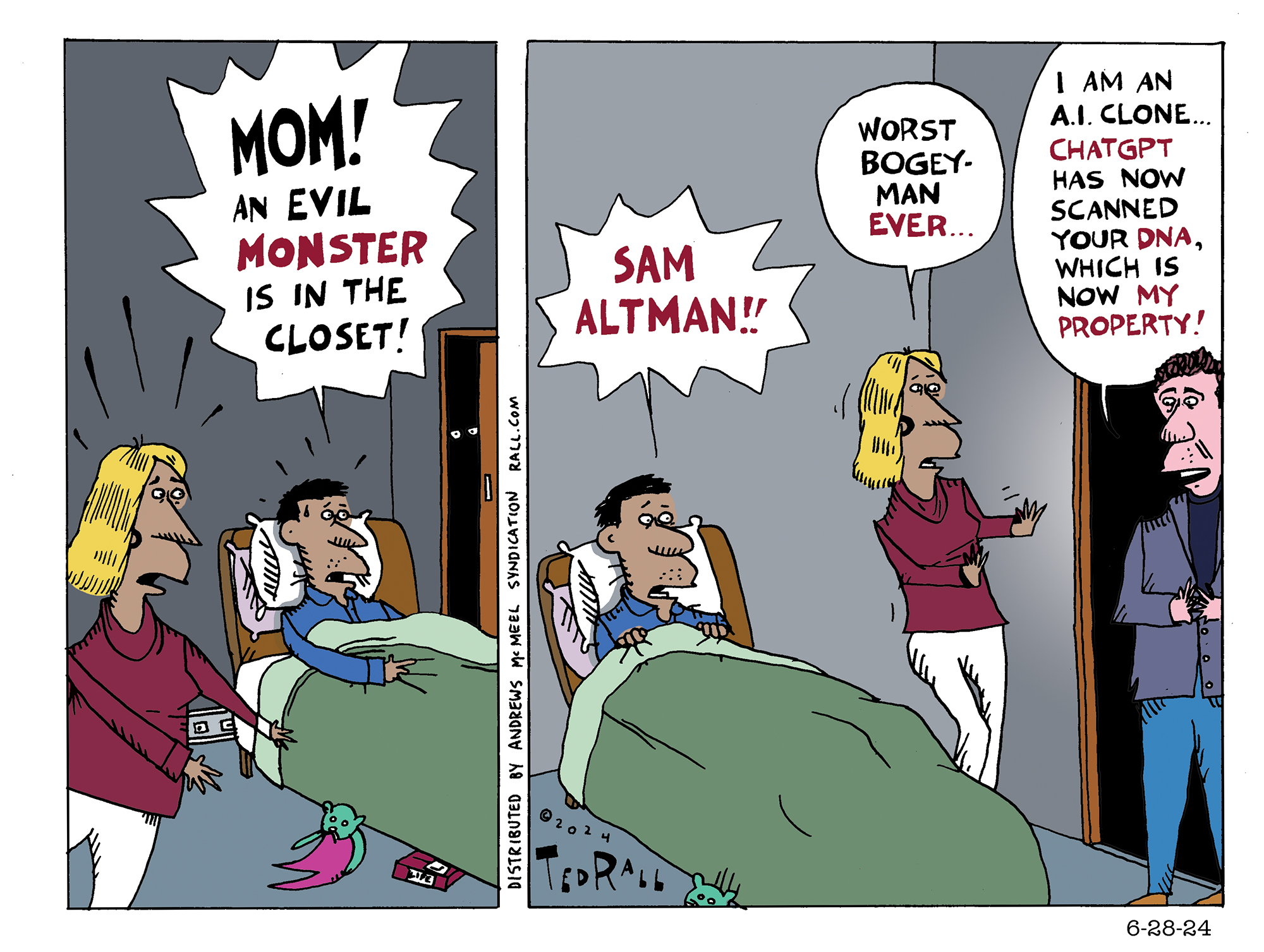

Despite considerable evidence that artificial intelligence is far from ready for prime time, just about every technology you can think of is being outfitted with the feature. From search engines to automobiles, we’re putting our lives into the “hands” of A.I. that advises us, literally, to eat rocks.

Deep Fake A.I. Ads Might Kill Us All

Seeing is believing. In the age of AI, it shouldn’t be.

In June, for example, Ron DeSantis’ presidential campaign issued a YouTube ad that used generative artificial-intelligence technology to produce a deep-fake image of former President Donald Trump appearing to hug Dr. Anthony Fauci, the former COVID-19 czar despised by anti-vax and anti-lockdown Republican voters. Video of Elizabeth Warren has been manipulated to make her look as though she was calling for Republicans to be banned from voting. She wasn’t. As early as 2019, a Malaysian cabinet minister was targeted by an AI-generated video clip that falsely but convincingly portrayed him as confessing to having appeared in a gay sex video.

Ramping up in earnest with the 2024 presidential campaign, this kind of chicanery is going to start happening a lot. And away we go: “The Republican National Committee in April released an entirely AI-generated ad meant to show the future of the United States if President Joe Biden is re-elected. It employed fake but realistic photos showing boarded up storefronts, armored military patrols in the streets, and waves of immigrants creating panic,” PBS reported.

“Boy, will this be dangerous in elections going forward,” former Obama staffer Tommy Vietor told Vanity Fair.

Like the American Association of Political Consultants, I’ve seen this coming. My 2022 graphic novel The Stringer depicts how deep-fake videos and other falsified online content of political leaders might even cause World War III. Think that’s an overblown fear? Think again. Remember how residents of Hawaii jumped out of their cars and jumped down manholes after state authorities mistakenly issued a phone alert of an impending missile strike? Imagine how foreign officials might respond to a high-quality deep-fake video of, for example, President Joe Biden declaring war on North Korea or of Israeli Prime Minister Benjamin Netanyahu seeming to announce an attack against Iran. What would you do if you were a top official in the DPRK or Iranian governments? How would you determine whether the threat were real?

Here in the U.S., generative-AI-created political content will stoke racial, religious and partisan hatred that could lead to violence, not to mention interfering with elections.

Private industry and government regulators understand the danger. So far, however, proposed safeguards fall way short of what would be needed to ensure that the vast majority of political content is what it seems to be.

The Federal Election Commission has barely begun to consider the issue. The real action so far, such as it is, has been on the Silicon Valley front. “Starting in November, Google will mandate all political advertisements label the use of artificial intelligence tools and synthetic content in their videos, images and audio,” Politico reports. “Google’s latest rule update—which also applies to YouTube video ads—requires all verified advertisers to prominently disclose whether their ads contain ‘synthetic content that inauthentically depicts real or realistic-looking people or events.’ The company mandates the disclosure be ‘clear and conspicuous’ on the video, image or audio content. Such disclosure language could be ‘this video content was synthetically generated,’ or ‘this audio was computer generated,’ the company said.”

Labeling will be useless and ineffective. Synthetic content that deep-fakes the appearance of a politician or a group of people doing or saying something that they actually never did or said sticks in people’s minds even after they’ve been informed that it’s wrong—especially when the material confirms or fits with viewers’ pre-existing assumptions and world views.

The only solution is to make sure such deep fakes are never seen at all. AI-generated deep fakes of political content should be banned online, whether with or without a warning label.

The culprit is the “illusory truth effect” of basic human psychology: once you have seen something, you can’t unsee it—especially if it’s repeated. Even after you are told that something you’ve seen was fake and to disregard it, it continues to influence you as if you still took it at face value. Trial lawyers are well aware of this phenomenon, which is why they knowingly make arguments and allegations that are bound to be ordered stricken by a judge from the court record; jurors have heard it, they assume there’s at least some truth to it, and it affects their deliberations.

We’ve seen how pernicious misinformation like the Russiagate hoax and Bush’s lie that Saddam was aligned with Al Qaeda can be—over a million people dead—and how such falsehoods retain currency long after they’ve been debunked. Typical efforts to correct the record, like “fact-checking” news sites, are ineffective and sometimes even serve to reinforce the falsehood they’re attempting to correct or undermine. And those examples are ideas expressed through mere words.

Real or fake, a picture speaks more loudly than a thousand words. False visuals are even more powerful than falsehoods expressed through prose. There is no contemporaneous evidence that any Vietnam War veteran was ever accosted and spat on by antiwar protesters, and throughout the late 1970s no vet made such a claim, even in personal correspondence. Yet many Vietnam vets began to say it had happened to them after they viewed Sylvester Stallone’s monologue in the movie “Rambo: First Blood,” which was likely intended as a metaphor. They probably even believe it; they “remember” what never occurred.

Warning labels can’t reverse the powerful illusory truth effect. Moreover, there is nothing to stop someone from reproducing and distributing a properly-warning-labeled deep-fake AI-generated campaign attack ad, stripped of any indication that the content isn’t what it seems.

AI is here to stay. So are bad actors and scammers. Particularly in the political space, First Amendment-guaranteed free speech must be protected. But thoughtful government regulation of AI, with strong enforcement mechanisms including meaningful penalties, will be essential if we want to avoid chaos and worse.

(Ted Rall (Twitter: @tedrall), the political cartoonist, columnist and graphic novelist, co-hosts the left-vs-right DMZ America podcast with fellow cartoonist Scott Stantis. You can support Ted’s hard-hitting political cartoons and columns and see his work first by sponsoring his work on Patreon.)

DMZ America Podcast #84: Debating the Debt Ceiling, Biden’s Secret Papers and Potpourri

Internationally-syndicated Editorial Cartoonists Ted Rall and Scott Stantis analyze the news of the day, starting with a brisk debate about whether the Debt Ceiling should be lifted or whether there should be one at all. Next, Ted and Scott weigh in on Secret Documents President Biden had piled up in his garage. Does this preclude a run for reelection in 2024? Lastly, a potpourri of topics ranging from the Wyoming Legislature proposing a ban on the purchase of electric vehicles to the Russian troop buildup in the west of Ukraine to recent projections that 90% of online content will be generated by AI by 2025. (This podcast is not, btw.)

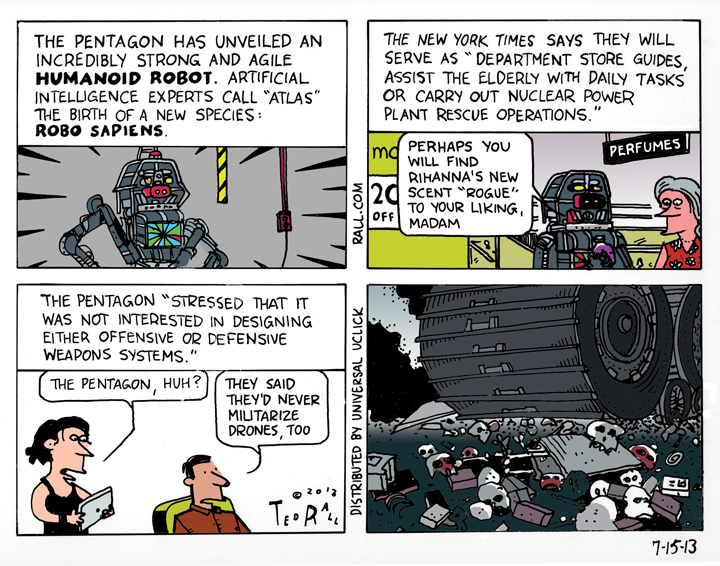

SYNDICATED COLUMN: Game of Drones – New Generation of Drones Already Choose Their Own Targets

“The drone is the ultimate imperial weapon, allowing a superpower almost unlimited reach while keeping its own soldiers far from battle,” writes New York Times reporter James Risen in his important new book “Pay Any Price: Greed, Power, and Endless War.” “Drones provide remote-control combat, custom-designed for wars of choice, and they have become the signature weapons of the war on terror.”

But America’s monopoly on death from a distance is coming to an end. Drone technology is relatively simple and cheap to acquire — which is why more than 70 countries, plus non-state actors like Hezbollah, have combat drones.

The National Journal’s Kristin Roberts imagines how drones could soon “destabilize entire regions and potentially upset geopolitical order”: “Iran, with the approval of Damascus, carries out a lethal strike on anti-Syrian forces inside Syria; Russia picks off militants tampering with oil and gas lines in Ukraine or Georgia; Turkey arms a U.S.-provided Predator to kill Kurdish militants in northern Iraq who it believes are planning attacks along the border. Label the targets as terrorists, and in each case, Tehran, Moscow, and Ankara may point toward Washington and say, we learned it by watching you. In Pakistan, Yemen, and Afghanistan.”

Next: SkyNet.

SkyNet, you recall from the Terminator movies, is a computerized defense network whose artificial intelligence programming leads it to self-awareness. People try to turn it off; SkyNet interprets this as an attack — on itself. Automated genocide follows in an instant.

In an article you should read carefully because of, or despite, the fact that it will totally freak you out, The New York Times reports that “arms makers…are developing weapons that rely on artificial intelligence, not human instruction, to decide what to target and whom to kill.”

More from the Times piece:

“Britain, Israel and Norway are already deploying missiles and drones that carry out attacks against enemy radar, tanks or ships without direct human control. After launch, so-called autonomous weapons rely on artificial intelligence and sensors to select targets and to initiate an attack.

“Britain’s ‘fire and forget’ Brimstone missiles, for example, can distinguish among tanks and cars and buses without human assistance, and can hunt targets in a predesignated region without oversight. The Brimstones also communicate with one another, sharing their targets.

[…]“Israel’s antiradar missile, the Harpy, loiters in the sky until an enemy radar is turned on. It then attacks and destroys the radar installation on its own.

“Norway plans to equip its fleet of advanced jet fighters with the Joint Strike Missile, which can hunt, recognize and detect a target without human intervention.”

“An autonomous weapons arms race is already taking place,” says Steve Omohundro, a physicist and AI specialist at Self-Aware Systems. “They can respond faster, more efficiently and less predictably.”

As usual, the United States is leading the way toward dystopian apocalypse, setting precedents for the use of sophisticated, novel, more efficient killing machines. We developed and dropped the first nuclear bombs. We unleashed the drones. Now we’re at the forefront of AI missile systems.

The first test was a disaster: “Back in 1988, the Navy test-fired a Harpoon antiship missile that employed an early form of self-guidance. The missile mistook an Indian freighter that had strayed onto the test range for its target. The Harpoon, which did not have a warhead, hit the bridge of the freighter, killing a crew member.”

But we’re America! We didn’t let that slow us down: “Despite the accident, the Harpoon became a mainstay of naval armaments and remains in wide use.”

U-S-A! U-S-A!

I can see you tech geeks out there, shaking your heads over your screen, saying to yourselves: “Rall is paranoid! This is new technology. It’s bound to improve. AI drones will become more accurate.”

Not necessarily.

Combat drones have hovered over towns and villages in Afghanistan and Pakistan for the last 13 years, killing thousands of people. The accuracy rate is less than impressive: 3.5%. That’s right: 96.5% of the victims are, by the military’s own assessment, innocent civilians.

The Pentagon argues that its new generation of self-guided hunter-killers are merely “semiautonomous” and so don’t run afoul of a U.S. rule against such weapons. But only the initial launch is initiated by a human being. “It will be operating autonomously when it searches for the enemy fleet,” Mark Gubrud, a physicist who is a member of the International Committee for Robot Arms Control, told the Times. “This is pretty sophisticated stuff that I would call artificial intelligence outside human control.”

If that doesn’t worry you, this should: it’s only a matter of time before other countries, some of which don’t like us, get these too.

Not much time.

(Ted Rall, syndicated writer and cartoonist, is the author of the new critically-acclaimed book “After We Kill You, We Will Welcome You Back As Honored Guests: Unembedded in Afghanistan.” Subscribe to Ted Rall at Beacon.)

COPYRIGHT 2014 TED RALL, DISTRIBUTED BY CREATORS.COM