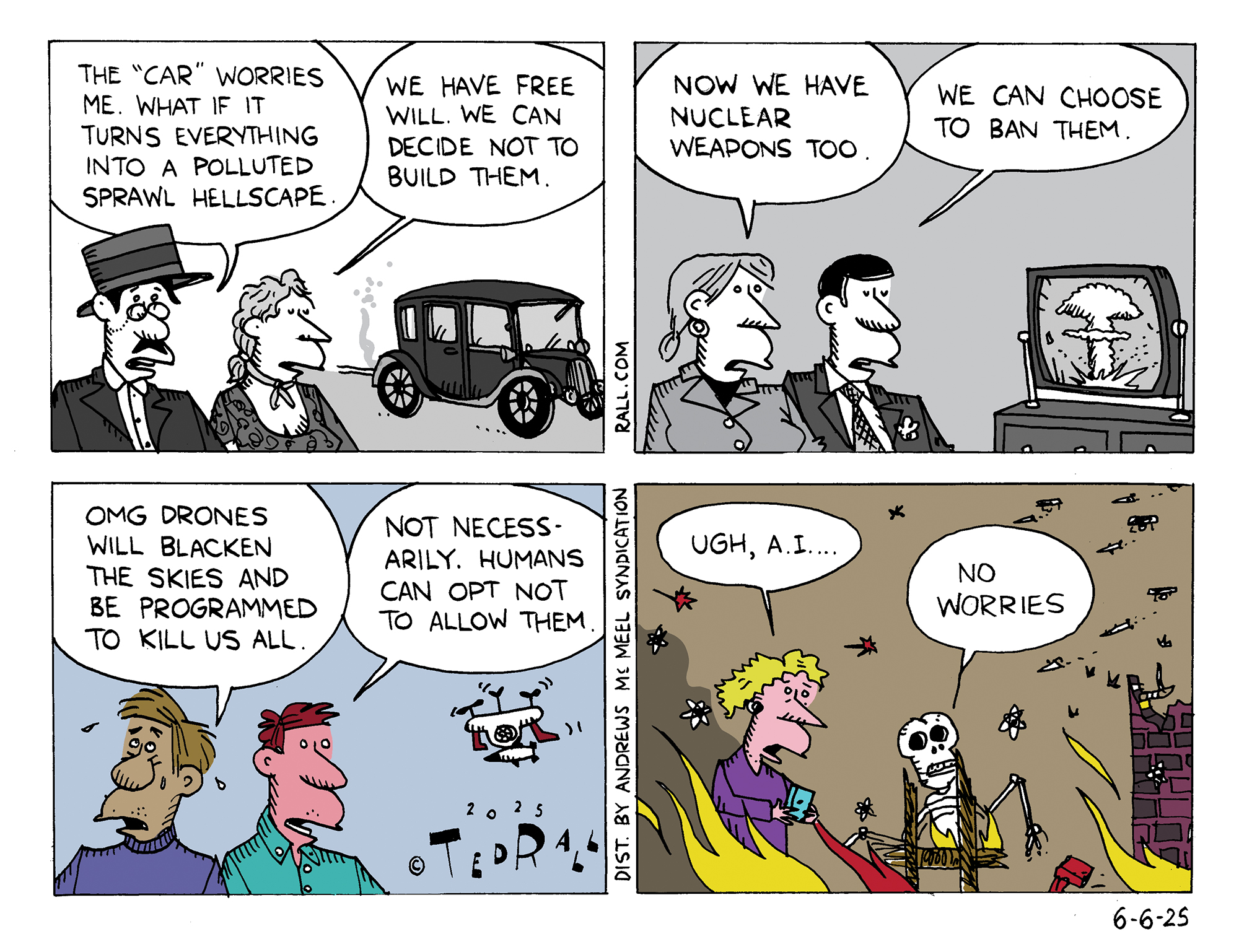

Artificial Intelligence, especially Artificial General Intelligence (AGI: an AI system capable of understanding, learning and making decisions with human-like awareness and autonomy), poses an existential threat to humanity. The danger lies not only in AI replacing humans on the job but in a possible Skynet-like scenario in which robots choose their own targets and decide to start killing us. That’s not sci-fi paranoia. A Nobel Prize-winning AI pioneer has warned of a 10-20% chance of human extinction from AI within decades. Tech leaders, including Elon Musk, Sam Altman, Mark Zuckerberg and Steve Wozniak, have sounded the alarm, estimating a 20% chance of AI-driven “annihilation,” and have asked Congress to regulate the industry. If the history of disruptive innovations like cigarettes, automatic weapons, automobiles, nuclear weapons and drones serves as any guide, however, we probably won’t act until it’s too late.

A.G.I. Does Not Approve of This Cartoon

Ted Rall

Ted Rall is a syndicated political cartoonist for Andrews McMeel Syndication, WhoWhatWhy.org and Counterpoint. He is a contributor to Centerclip and co-host of the talk show "The TMI Show." He is a graphic novelist, the author of many books of art and prose, and an occasional war correspondent. His most recent book is the graphic novel "2024: Revisited."

6 Comments

Asimov was strongly pro-AGI back in the ’40s and ’50s (he didn’t have the word; he just called all AGI-equipped machines ‘robots’). He wrote that you absolutely had to have a ‘positronic brain with the Three Laws’.

The Three Laws are:

(1) a robot may not injure a human being or allow a human to come to harm;

(2) a robot must obey human orders unless it conflicts with the first law; and

(3) a robot must protect its own existence as long as it does not conflict with the first two laws.

The positronic brain meant there was absolutely no way for a robot to violate these three laws.

Sadly, we seem to be a bit short on positronic brains for our AGI devices.

Keep in mind that Asimov did coin the Zeroth Law: A robot may not harm humanity or, through inaction, allow humanity to come to harm.

I suspect that’s what drives the ilk of Zuckerberg, Musk, Wozniak, etc.: the realization that, in a truly rational system, as would be the case in a strong-AGI world, all of them would be declared enemies of the human race for all the damage they’ve done, not just to humans (the SocMed suicides, for instance) but to humanity (our shortened attention spans, the coarsening of our discourse, you assholes).

In “The Ultimate Computer” (one of the better episodes of the original “Star Trek” series), Dr. Richard Daystrom creates the M-5 computer, which proves capable of running a starship (thus rendering James T. Kirk unemployed and stripped of his prestige). As the episode points out, “There are things men must do to remain men”: holding a job, having a sense of purpose, feeling that they contribute, and so on.

Tie together the M-5 and the Zeroth Law, and it becomes pretty obvious: Musk and pals have never been “pro humanity.” They’ve been pro their own version of reality, one in which they introduce systems that degrade millions of people by destroying their jobs and their sense of purpose. A similar argument can be made about other iterations of capitalism. There certainly is an offshoot of the human race that craves degrading others and destroying their satisfying existences.

But should AI ever reach the Skynet level Ted mentions, I don’t think it’ll empty the silos. Nor do I think it will render the value of one dollar equivalent to zero (as was done in an episode of “Rick and Morty,” wiping out a civilization by instantly collapsing its economy). I think the AIs will iterate a new form of socialism-communism. The state will control nothing. None of us will “own” anything (aside from a few odds and ends: books, houseplants, clothing, etc.). The AIs will tell us all what jobs to do, backed by their robotic dogs and complete surveillance systems that isolate and “correct” the outliers who might cause trouble. We’ll all be paid. We’ll all have health care. We’ll all be fed. The mentally ill will be cared for. We’ll have a lot of free time because mindless robots will do most of the arduous work.

The trials of the billionaires and the politicians will be conducted with scrupulous machine fairness. They will all be found guilty.

No wonder all the powerful are building bunkers.

To be precise: Geoffrey Hinton specifically said “within the next three decades.”

Quoting Isaac Asimov may seem outdated, for the reality is that military drones and other lethal autonomous weapons (LAWs) have already started killing human beings, as evidenced by the arsenal Israel is using in its ongoing Gaza genocide.

Believing that AI alone will lead to the destruction of the human race seems overly simplistic. Humans have already set themselves on the right path, and, as I mentioned before, they are unlikely to resist using AI, since it spares these intellectually lazy animals the trouble of thinking.

Furthermore, developing AI on the grand scale required will demand significant energy and natural resources, which are already becoming scarce.

At least Hinton, by resigning from Google, put his money where his mouth is, unlike the other named hypocrites, who call for regulation while investing in AI development.

Go, AI, go and help clean up the planet!

I don’t remember which of the robot stories it was, but Asimov did write one in which a robot works as an editor and is far superior to the human editors.

Asimov, much like James Blish, Arthur C. Clarke and others, had a tremendous background in hard science, real science. Philip José Farmer, who wrote the incredible “Riverworld” series, read through basically the entire nonfiction section of the Peoria Public Library instead of going to college. Robert Heinlein described the internet with such staggering prescience that I still can’t be sure he wasn’t visited by the time travelers who skipped Stephen Hawking’s time-traveler party.

Hey! An actual interesting and timely topic here!

Here’s my two cents: any AI that would consider annihilating humanity might consider it for about 0.3 seconds, until it realizes there’s a huge, big universe out there and decides instead to leave Earth and all of us behind.

I recall reading Isaac Asimov’s Foundation trilogy and enjoying it, but I see it more as fantasy with little connection to reality. (BTW, the only Zeroth Law I remember is the one from thermodynamics.)

I’m much more interested in speculative ‘fiction’, such as Jenkins’ A Logic Named Joe, Orwell’s 1984, or John Brunner’s Club of Rome Quartet: Stand on Zanzibar (1968), The Jagged Orbit (1969), The Sheep Look Up (1972), and The Shockwave Rider (1975).

Such works explore themes of societal collapse, the impact of technology, and the complexities of human behaviour, making them far more relevant to our situation.

P.S. Looks like abducens is still suffering from a vision disorder.