Last week’s EU court ruling ordering Google and other search engines (there are other search engines?) to process requests from European citizens to erase links to material about them is being criticized by techno-libertarians. Allowing people to clean up what has become the dreaded Permanent Record That Will Follow You the Rest of Your Life, they complain, creates an onerous inconvenience to tech companies, amounts to censorship, and infringes upon the free flow of information on the Internet.

Even if those concerns were valid (and they’re not), I’d agree with the European Court of Justice’s unappealable, final verdict in the case of Mario Costeja González, a Spanish national who asked that a Google link to a property foreclosure be deleted since the debt had been paid off and the matter resolved. He did not request, nor did the court rule, that the legal record itself, which dated back to 1998, be expunged from cyberspace, merely that he ought not to suffer shame or embarrassment for his former financial difficulties every time an acquaintance or potential employer types his name into a browser, for the remainder of his time on earth, and beyond.

Offline, the notion that people deserve a fresh start is not a radical concept; in the United States, even unpaid debts vanish from your credit report after seven years. So much stuff online is factually unreliable that “according to the Internet” is a joke. There are smears spread by angry ex-lovers, political enemies, bullies and other random sociopaths. The right to eliminate such material from search results is long overdue. It’s also not without cyber-precedent: after refusing to moderate comments by “reviewers,” many of whom had not read the books in question, Amazon now removes erroneous comments.

The ruling only affects Europe. But Congress should also introduce the U.S. Internet to the joys of forgetting. Obviously, obvious lies ought to be deleted: just last week, conservative bloggers spread the meme that I had “made fun of” the Americans killed in Benghazi. I didn’t; not even close. Google can’t stop right-wingers from lying about me, but it would sure be nice of them to stop linking to those lies.

But I’d go further. Lots of information is accurate yet ought to stay hidden. An unretouched nude photo is basically “true,” but should it be made public without your consent?

We Americans are rightly chastised for our lack of historical memory, yet society benefits enormously from the flip side of our forgetfulness — our ability to outgrow the shame of our mistakes in order to reinvent ourselves.

“More and more Internet users want a little of the ephemerality and the forgetfulness of predigital days,” Viktor Mayer-Schönberger, professor of Internet governance at the Oxford Internet Institute, said after the EU court issued its decision. Whether your youthful indiscretions include drunk driving or an affair with your boss while you were an intern, everyone deserves a second chance, a fresh start. “If you’re always tied to the past, it’s difficult to grow, to change,” Mayer-Schönberger notes. “Do we want to go into a world where we largely undo forgetting?”

Ah, but what of poor Google? “Search engine companies now face a potential avalanche of requests for redaction,” Jonathan Zittrain, a law and computer science professor at Harvard, fretted in a New York Times op/ed.

Maybe. So what?

Unemployment, even among STEM majors, is high. Tech companies have been almost criminally parsimonious, hiring a small fraction of the number of employees needed to get the economy moving again, not to mention provide decent customer service.

Google has fewer employees than a minor GM parts supplier.

Would it really be so terrible for Google to hire 10,000 American workers to process link deletion requests? So what if lawyers make more money? They buy, they spend; everything trickles down, right? Google is worth more than Great Britain. It’s not like they can’t afford it.

Onerous? Google has a space program. It is mapping every curb and bump on America’s 4 million miles of roads.

They’re smart. They can figure this out.

“In the United States, the court’s ruling would clash with the First Amendment,” the Times reported with an unwarranted level of certitude. But I don’t see how. The First Amendment prohibits censorship by the government. Google isn’t a government agency — it’s a publisher.

This is what the EU story is really about, what makes it important. In order to avoid legal liability for, among other things, linking to libelous content, Google, Bing and other search engines have always maintained that they are neutral “platforms.” As Zittrain says, “Data is data.” But it’s not.

Google currently enjoys the liberal regulatory regime of a truly neutral communications platform, like the Postal Service or a phone company. Because what people choose to write in a letter or say on the phone is beyond anyone’s control, it would be unreasonable to blame the USPS or AT&T for what gets written or said (though the NSA would like to change that).

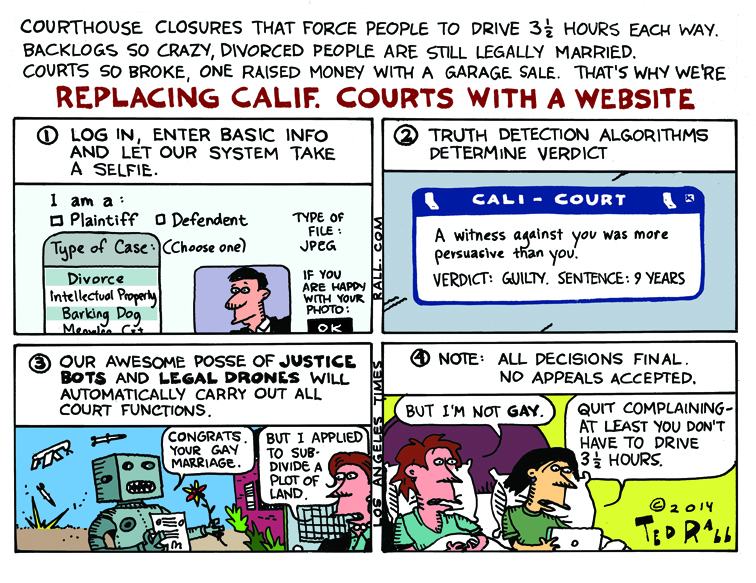

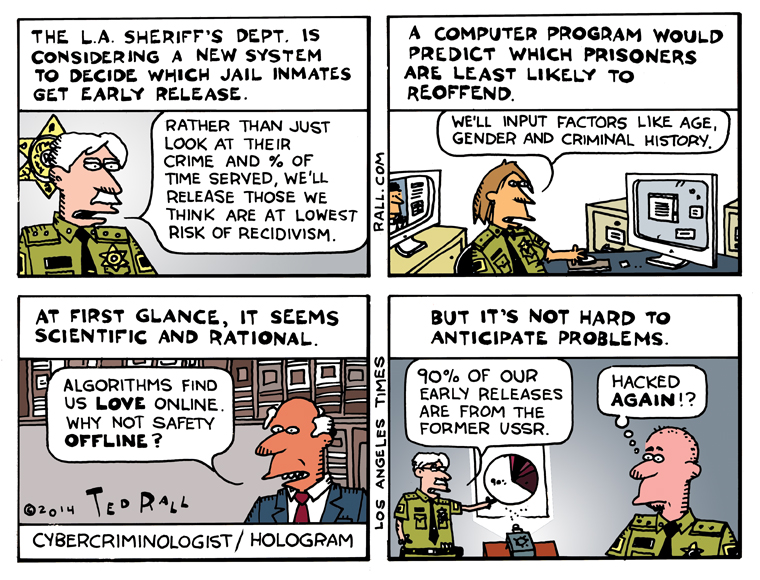

Google-as-platform is, and always was, a ridiculous fiction. Search results, Google claims, are objective. What comes up first, second and so on isn’t up to them. It’s just algorithms. Data is data. The thing is, algorithms are code. Computer programs. They’re programmed by people. By definition, coders decide.

Algorithms are not, cannot be, and never will be, “objective.”

That’s just common sense. But we also have history. We know for a fact that Google manipulates searches, tweaking their oh-so-objective algorithms when they cough up results they don’t like. For example, they downgrade duplicated content — say, the same essay cut-and-pasted across multiple blogs. They sanction websites that try to game the system for higher Google listings by using keywords that are popular (sex, girls, cats) but unrelated to the accompanying content.

They censor. Which makes them a publisher.

You probably agree with a lot of Google’s censorship (of kiddie porn, for example), but it’s still censorship. Deciding that some things won’t get in is the main thing a publisher does. Google is a publisher, not a platform. This real-world truth will eventually be affirmed by American courts, exposing Google not only to libel lawsuits but also to claims by owners of intellectual property (I’m talking to you, newspapers and magazines) that Google is illegally profiting by selling ads next to the relevant URLs.

Although the right to censor search results that are “inadequate, irrelevant or no longer relevant” (the words of the EU ruling) would prevent, say, the gossip site TMZ from digging up dirt on celebrities, there would also be a salutary effect upon the free exchange of information online.

In a well-moderated comments section, censorship of trolls elevates the level of dialogue and encourages people who might otherwise remain silent, for fear of being targeted online, to participate. A Google that purges inadequate, irrelevant or no longer relevant items would be a better Google.


COPYRIGHT 2014 TED RALL, DISTRIBUTED BY CREATORS.COM